Project Description

Electroacoustic artists and academics have been experimenting with multi-channel audio and spatialization of sound for years. On the other hand, the rock and noise scene has been experimenting with sounds and composition structures for a while, but never much with spatialization.

This project was born from a short period of research in which I tried to find rock artists who used spatialization in their compositions. While not completely nonexistent, multi-channel rock or noise pieces and performances are quite rare. It is no secret that meaningful multi-channel compositions and performances are not a simple affair. With that in mind, I explored whether it would be possible to create a tool that would enable rock/noise musicians to spatialize sound as they perform.

Materials

- Hardware: Novation Remote Zero SL (MIDI controller), Behringer FCB1010 (MIDI footboard controller), computer, multiple guitar pedals, EBow, Rebel Relic custom guitar

- Software: Max

Introduction

Notable electroacoustic artists are oftentimes classically trained or academics. These characteristics do not intrinsically enable these artists to work in a multi-channel setting; however, they do provide them with a thought process that can lead to what is commonly described nowadays as "out-of-the-box thinking".

Edgard Varèse, a French composer whose experiments revolved around "organized sounds" and musique concrète, went on to create Poème électronique. The piece was meant to be played at the Philips Pavilion, designed by Le Corbusier for the Brussels World's Fair in 1958, in a context which could be considered today as "multimedia." The 8-minute composition was played and spatialized across the 300+ loudspeakers in the building.

John Chowning, an American composer and a Stanford professor, developed FM synthesis. Chowning also composed Turenas, a piece that is meant for a quadrophonic setup–four speakers. He wrote at length about the technicalities of that composition and that setup.

On the side of rock or noise artists, the examples are quite rare. It seems that in 1967, Pink Floyd performed a quadrophonic concert. However, further research about rock and quadrophonic compositions mostly leads to albums instead of performances. As the quadrophonic format never really achieved mainstream success, even though some audiophiles adopted it, it disappeared.

In the mid-1990s, the indie rock band The Flaming Lips were touring constantly for their albums Transmissions from the Satellite Heart and Clouds Taste Metallic. Exhausted, the band took a break and decided to experiment with different types of compositions. Fascinated by the eerie ambiance and sound quality of concrete parking garages, Wayne Coyne, the band's singer, led the band to what is now known as The Parking Lot Experiments. People were invited to bring their cars to those parking lots, where the band provided tapes. Dozens of people were then directed by the band members to play those tapes in a certain order or in sync. Later on, the band created The Boom-Box Experiments, in which the band still distributed tapes and directed the audience, but this time with tape players provided in a concert venue. All those experiments inspired the band to compose Zaireeka, a 4-CD album meant to be played simultaneously on as many sound systems, which makes it in effect an octophonic album. Even though some listening sessions have popped up here and there since the album's release, the band never meant for these pieces to be performed live.

Fast forward to the early 2000s: the French math rock band Chevreuil had a different way of diffusing sound. Most music performances take place in a setting where the performers face the audience, projecting their music forwards, towards the audience. The French duo took a different approach, placing their instruments in the middle of the audience, drummer facing the guitarist/keyboardist, and surrounding themselves with amplifiers. Additionally, the guitars/keyboards are oftentimes played in a loop, which the musician moves from amp to amp as the pieces progress. This setup also allows the audience to choose their vantage point, or even to move during the performance.

More recently, the Montréal rock band Solids have sometimes chosen this configuration for their performances.

This setup is oftentimes a complicated choice for a band. Some venues can be quite restrictive in what they allow the artists to do, preventing experimentation. Even where venues allow it, not all performers would have enough space to play in such a context. Worse, it could sometimes be a safety issue for the performers to do so.

As we can see from those examples, spatialization is not a compositional tool that is easily chosen by even the most experimental rock/noise artists.

Artistic Intent

My practice as a musician and performer revolves around creating moods and emotions, not communicating messages.

Post-rock means bands that use guitars but in non rock ways, as timbre and texture rather than riff and powerchord. It also means bands that augment rock's basic guitar-bass-drums lineup with digital technology such as samplers and sequencers, or tamper with the trad rock lineup but prefer antiquated analog synths and nonrock instrumentation.

By placing the audience in the middle of the sound diffusion–rather than projecting sound towards them from a stage–I hope the audience can be immersed more completely in the moods and melodies of the piece.

What happens in “direct” highlights the present. Live music, produced live and where we are present, is among the phenomena which have the property of amplifying our feeling of the present moment.

I want the audience not simply to look at a performance on a stage, but to feel the music from all around, akin to when musicians practice in a studio, surrounded by their instruments and amplifiers.

The fact of becoming aware that the form is coming into being, and how it is doing that, turns the attention of the witness to their own present time, implicates them.

Technical Approach

As we have seen in the introduction, performing a piece for multiple loudspeakers is not an option chosen easily or randomly. Rock musicians–guitarists, bassists, and keyboardists mostly–have an array of useful tools that enable them to modify their sound without hindering their performance. One of the most used tools is nicknamed a "stompbox", so named because the box is built solidly enough for the musician to stomp on it. It is a small box that usually contains single-purpose sound-modifying electronics: distortion, reverb, delay, etc. It's a black box of sorts: an audio signal comes in, is modified–potentiometers and buttons on the box allow the musician to choose from a certain range of settings–and comes out modified. The modular nature of such a tool allows musicians to combine and chain sound modifications in near infinite possibilities.

The visual programming software Max works in a similar fashion. Whereas guitar pedals–the stompboxes mentioned above–are usually made for a single purpose, Max allows greater flexibility, as it can not only modify audio signals but also be used to control events. By default, interaction with software is done on a computer, traditionally meaning a keyboard and a screen. This configuration is less than ideal for a performer whose hands are busy with a guitar. As such, MIDI controllers–a pedalboard and a console with sliders and pots–are used as human interfaces to interact with the Max patch–the program written for the piece.

At this time, the software and controls are developed for this specific composition, not a general-purpose tool. The controls are meant so that the performer can affect events and settings as the composition progresses. Similar to how a traditional composition has a written score, this multi-channel composition has a written diffusion score, as a reference for the performer to trigger spatialization and other events as the composition is performed, and to be able to repeat the performance in a relatively similar fashion from one performance to the next.

Audio Signal Flow

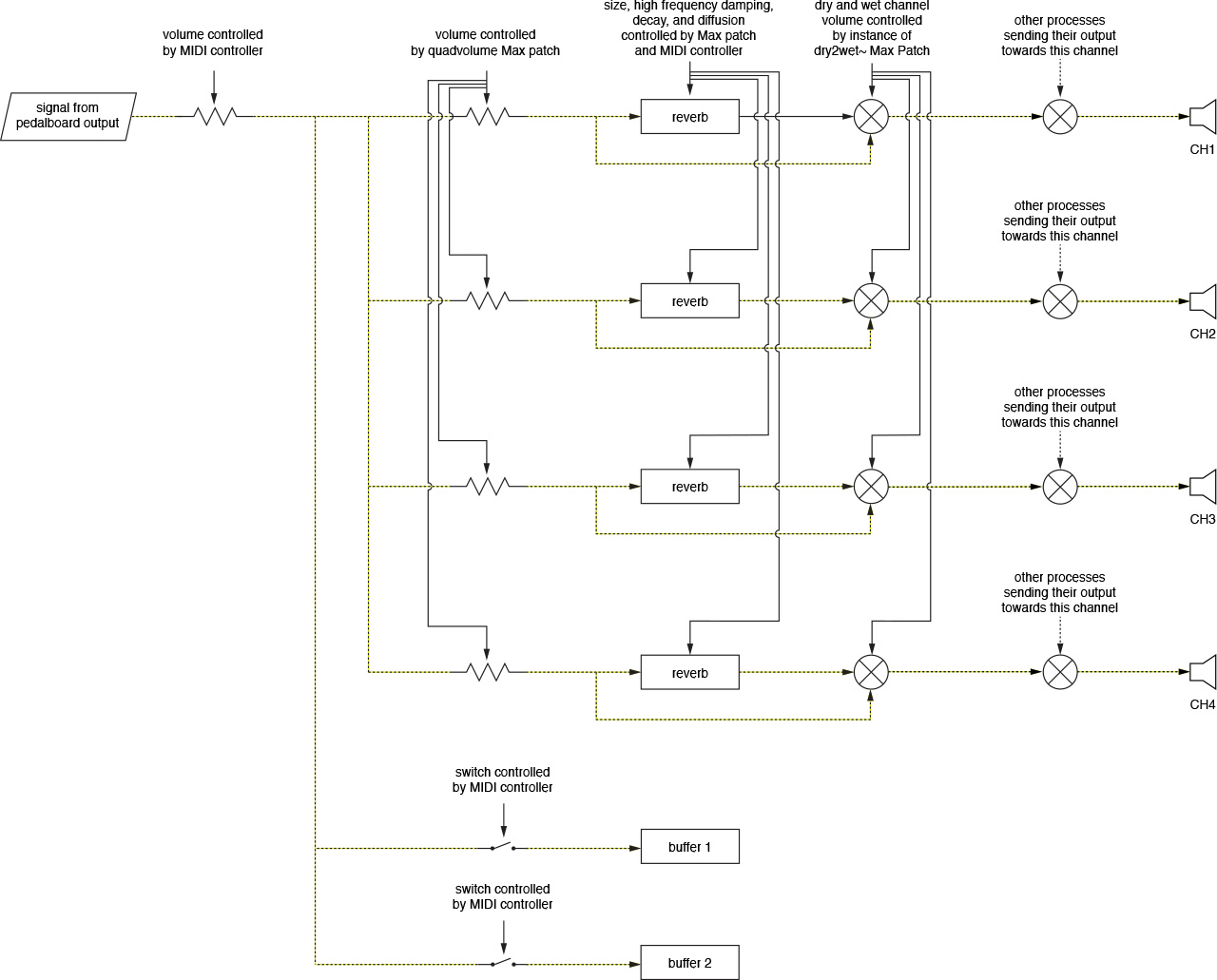

The piece is meant to be played on guitar, and diffused onto a quadrophonic setup. A single prerecorded part is used; everything else is played and looped live. Fig. 3, seen below, details how the single input of the guitar is routed in the software, from the quadrophonic mapping to the recording of loops in buffers.

The input signal's volume is available for the performer to control. After that, the signal is copied into multiple parallel paths.

One copy of the signal–let's call it the monitor–is passed directly through the quadvolume Max patch–detailed further below–which controls the volume of the output signal to four channels for spatialization. Each of the four channels is then passed through an instance of a reverb patch. The clean signal is merged with the reverbed signal in the dry2wet~ Max patch–also detailed below–and is then sent towards the appropriate loudspeaker channel.

The other signal copies are connected to the buffers that are meant for recording. The MIDI pedalboard allows the performer to control which buffer should start recording the signal.

In parallel to the input audio signal flow, there is a prerecorded buffer–a sample–whose signal flow is detailed in fig. 4 below.

The sample's playback speed can be controlled by a MIDI controller and a Max patch. After that, the signal is split into parallel paths, in the same way as the monitor audio signal. The output for each channel is then merged with the monitor signals before being sent to the loudspeaker channels.

The other buffers into which the input audio signal is recorded are routed the same way, and then merged before output as well. Figures 5 to 8 below illustrate that point.

Fig. 5: Melody audio signal flow

Fig. 6: Spatial decoration audio signal flow

Fig. 7: Surreal audio signal flow

Fig. 8: Bass line audio signal flow

Max Patches

As explained in the introduction, this project is an experiment in creating a tool to allow a performer to play and spatialize sound and music.

The Max visual programming environment has an option to display only chosen user interface (UI) elements in what it calls a "presentation mode". Developing the data flow and presenting only relevant information to the performer is quite similar to how guitar pedals work, thus helping the musician during a performance.

Main Program

The main program contains the audio signal flows–detailed in figures 3 to 8 above–as well as some additional logic. Everything is encapsulated in a subpatch, so that the main interface is not cluttered with everything at once. An overview of all subpatches is visible at the top of this page. Although Max processes objects from right-to-left, bottom-to-top, let's analyze the image above in the reading order most used in Western culture: left-to-right, top-to-bottom.

The sound input block at the top left, and its monitor subpatch, encapsulate the audio signal flow of fig. 3, although they do not handle the buffers at the bottom of the figure.

The sound output block handles the muxed volume sent to the loudspeakers.

The remote zero sl block contains a subpatch that handles the MIDI control signals sent by the controller of the same name. Some interface elements are presented (init/reset buttons, name of connected controller, MIDI channel, and MIDI value) so that the performer can quickly check that everything is functional. These control signals affect different patches at different moments of the performance, as noted in each of the audio signal flow figures above.

The fcb1010 block is similar, as it contains a subpatch that handles the MIDI control signals sent by the FCB1010 pedalboard. Here also, minor UI elements are available for the performer, and control signals affect different processes.

The master loop block represents the contents of fig. 4. The melody loop and the loop 03 blocks contain the flows of figures 5 through 8. While their audio signal flow diagrams are identical, there are some minor logical differences in their Max patch counterparts, as control triggers and audio effect settings are slightly different from one another.

The master loop requires the performer to load a static prerecorded audio file. Once the file is loaded and starts playing, a progress bar is updated to inform the performer of the playback. It serves as a visual metronome.

The melody loop patch is where buffer 1 from fig. 3 is used. To trigger recording into the buffer, which is played back by a groove~ Max object, the performer must first enable the functionality. Once enabled, the recording starts when the static audio file loop restarts at its 0 ms point, and runs until its end. The performer is then responsible for playing something that can loop within that duration. Avoiding a click in the loop is also the performer's responsibility, as this version of the buffer recording does not handle overdubbing. Once the recording is complete, its playback starts automatically and remains in sync with the master loop–see fig. 5. Two additional groove~ objects exist–represented in figures 6 and 7–to scrub the same buffer, but at different playback speeds, with different reverb settings, and with different quadrophonic diffusion settings.
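The arm-then-record-on-restart behaviour described above can be sketched as a small state machine. This is only an illustration in Python, not the Max patch itself: the class name `LoopRecorder`, its methods, and the idea of detecting the restart by watching the master position wrap back towards 0 ms are all assumptions made for the example.

```python
from enum import Enum


class State(Enum):
    IDLE = "idle"
    ARMED = "armed"          # performer enabled recording, waiting for restart
    RECORDING = "recording"  # recording for exactly one pass of the master loop
    PLAYING = "playing"      # recorded loop plays back in sync


class LoopRecorder:
    """Hypothetical model of one loop buffer synced to the master loop."""

    def __init__(self) -> None:
        self.state = State.IDLE
        self._last_position_ms = 0.0

    def arm(self) -> None:
        """Performer enables recording (for example from the pedalboard)."""
        if self.state is State.IDLE:
            self.state = State.ARMED

    def on_master_position(self, position_ms: float) -> State:
        """Feed the master loop position; react when it wraps back to the start."""
        wrapped = position_ms < self._last_position_ms
        self._last_position_ms = position_ms
        if wrapped:
            if self.state is State.ARMED:
                self.state = State.RECORDING  # master loop restarted: start recording
            elif self.state is State.RECORDING:
                self.state = State.PLAYING    # one full pass recorded: start playback
        return self.state
```

Because both transitions happen only on a restart of the master loop, the recorded loop always has the master loop's length, which is what keeps playback in sync.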

The loop 03 block, also named the bass line, is much simpler. It contains buffer 2 from fig. 3, and recording is triggered by the performer, the same way as buffer 1. Here also, playback starts automatically after recording, adding voices to the composition in real time. Where the melody loop block duplicates buffer scrubs, the bass line is unique. There are however moments where the playback is controlled through preset loop points rather than letting the playback run its course. More details will be provided about this in the Compositional Structure section below.

The target sample block informs the performer of which buffer is the target for the recording. Initially the performer would have also been responsible for what is now the prerecorded master loop. We will see how this was handled in the Limitations, Difficulties, and Resolutions section below.

Before the full program was completed, triggering recording and other events was handled on-screen. The playback block had a few more buttons, but now only one remains: one to stop all playback. Even so, that button is of no use anymore, as that functionality can now be triggered by the pedalboard.

The score block is a list of events and controls available for the musician to use during the progression of the performance. More details will be provided about this in the Compositional Structure section below.

quadvolume

As detailed above, Max allows reusable patches to be developed, an approach also known as modular programming. These can live within the main patch itself, but can also be external files. Rather than copying and pasting the same patch many times, it is more efficient to use Max's ability to create an external patch and load it in the main one.

As such, quadvolume has been externalized and open-sourced, so it can be reused easily.

The quadvolume object itself takes two inputs: values (0-127) for the left-right panning, as well as values (0-127) for the front-back panning. As Max is a visual programming software, it may be best to use the quadvolume object in tandem with the pictslider, shown above in fig. 10.

The panning that the pictslider controls is meant to be read with the performance setup picture above in fig. 11. The bottom of the component represents the front of the audience, where the performer stands. Each corner of the component represents a speaker position. If the cursor is in the center of the component, all speaker volumes are at full output. Moving the cursor from the center towards a speaker position reduces the opposite speaker volume, thus moving a sound in space. This interface makes it easy to visualize where sounds move in space.
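The panning behaviour described above can be sketched in a few lines of Python. This is not the quadvolume patch itself: the linear gain law, the function name `quad_gains`, and the convention that y = 0 is the front (bottom of the slider) are assumptions made for illustration. Only the 0-127 input range and the "center means full volume everywhere" behaviour come from the description.

```python
from typing import NamedTuple

MIDI_MAX = 127


class QuadGains(NamedTuple):
    front_left: float
    front_right: float
    back_left: float
    back_right: float


def _normalize(value: float) -> float:
    """Map a 0-127 control value to the range -1.0 .. +1.0."""
    if not 0 <= value <= MIDI_MAX:
        raise ValueError(f"control value out of range: {value}")
    return (value / MIDI_MAX) * 2.0 - 1.0


def quad_gains(x: float, y: float) -> QuadGains:
    """Return one gain per speaker for a cursor at (x, y).

    Assumed conventions: x = 0 is left, x = 127 is right,
    y = 0 is front (bottom of the slider), y = 127 is back.
    """
    nx = _normalize(x)
    ny = _normalize(y)
    # Each side stays at full volume until the cursor moves away from it.
    left = 1.0 - max(0.0, nx)
    right = 1.0 - max(0.0, -nx)
    front = 1.0 - max(0.0, ny)
    back = 1.0 - max(0.0, -ny)
    return QuadGains(front * left, front * right, back * left, back * right)
```

With these assumptions, `quad_gains(63.5, 63.5)` leaves all four speakers at full volume, while `quad_gains(0, 0)` leaves only the front-left speaker sounding. A real patch might use an equal-power curve instead of a linear one.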

dry2wet~

In the context of guitar pedals, dry and wet respectively refer to an unmodified audio signal, and to an audio signal onto which the sound effect (distortion, reverb, delay, etc.) is applied.

As mentioned before, Max processes can be chained like guitar pedals; however, there is no built-in way to balance dry and wet audio signals.

Here as well, the dry2wet~ patch has been externalized and open-sourced. While not part of spatialization per se, this patch is useful, as a more reverbed signal can seem farther away than a dry signal.
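A dry/wet balance of this kind is commonly implemented as a crossfade between the two signals. The sketch below shows the idea on a single sample. It assumes a linear crossfade driven by a 0-127 control value; the function name `dry_wet_mix` and both of those choices are illustrative assumptions, not a description of the actual dry2wet~ patch.

```python
MIDI_MAX = 127


def dry_wet_mix(dry: float, wet: float, amount: int) -> float:
    """Blend one dry sample with one wet sample.

    amount = 0 gives only the dry signal, amount = 127 only the wet signal
    (assumed control range and linear law).
    """
    if not 0 <= amount <= MIDI_MAX:
        raise ValueError(f"amount out of range: {amount}")
    wet_gain = amount / MIDI_MAX
    dry_gain = 1.0 - wet_gain
    return dry * dry_gain + wet * wet_gain
```

Raising `amount` for a given voice pushes it towards the fully reverbed signal, which is one way to make that voice seem more distant.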

Limitations, Difficulties, and Resolutions

Buffers

As underlined in the main program description, the performer was initially supposed to also play and record what is now the master loop. Looping in Max relies by default on the length of the buffer. There was no way to know the length of the master buffer precisely in advance, as even with a metronome, a musician cannot easily play for an exact number of milliseconds. To remedy that, all buffers were originally set to some 20 seconds, and loop points were manually calculated from when the recording started and stopped, so that looping could be seamless.

Issues appeared when the playback speed of other buffers was changed: the loop points were ignored, causing long silences, as the buffer was only partly filled. The first attempt to fix this issue was to resize the buffers; however, Max empties a buffer when it is resized.
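The arithmetic behind this problem can be sketched as follows. The function names and the 7.5-second example are hypothetical; the 20-second buffer comes from the "some 20 seconds" mentioned above and is treated here as an exact value only for the sake of the example.

```python
from typing import Tuple

BUFFER_MS = 20_000.0  # assumed exact size for the "some 20 seconds" buffers


def loop_points(record_start_ms: float, record_stop_ms: float,
                buffer_ms: float = BUFFER_MS) -> Tuple[float, float]:
    """Return (loop start, loop end) for the recorded region of the buffer."""
    if not 0 <= record_start_ms < record_stop_ms <= buffer_ms:
        raise ValueError("recording must lie inside the buffer")
    return record_start_ms, record_stop_ms


def silence_per_pass_ms(record_start_ms: float, record_stop_ms: float,
                        speed: float, buffer_ms: float = BUFFER_MS) -> float:
    """Silence heard on each pass if the loop points are ignored.

    The whole buffer is then played, including its empty part, and a slower
    playback speed stretches that empty part even further.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    start, stop = loop_points(record_start_ms, record_stop_ms, buffer_ms)
    empty_ms = buffer_ms - (stop - start)
    return empty_ms / speed
```

For instance, a 7.5-second recording in a 20-second buffer leaves 12.5 seconds empty, and at half speed that becomes 25 seconds of silence on every pass.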

I then decided that using a prerecorded audio file would resolve a few issues, and allow more creative control over the introduction of the composition. When the audio file is loaded, the other buffers resize to the file's length, ensuring that all loops work.

Overdubbing

In the context of looping, overdubbing refers to adding recordings to a buffer without erasing what it already contains. Most looper pedals–the Boss Loop Station series for example–offer this functionality. Recording to Max buffers is much more bare and by default does not do overdubs.

In a way, this issue becomes a strength for spatializing loops in a live performance: if all recordings were added to the same buffer, there would be no way to independently move discrete sound sources across the different loudspeakers.

Reverb

As seen in figures 3 to 8, reverb components are duplicated everywhere for each output channel. Originally, the reverb was applied only to the source, which was then diffused across channels.

While practicing the piece, it did not feel right that a reverbed sound would carry its reverb along as it moved, instead of the reverb remaining in place, excited by the moving source. It then made sense to duplicate the reverb so that one instance was assigned to each output. The amplitude of the signal passing through an output then drives that output's reverb, giving a much more natural feeling of a moving sound. I discovered this while practicing the piece, but I should have remembered that this is exactly what Chowning underlined in his text Turenas: the realization of a dream.
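The difference between the two reverb placements can be illustrated with a toy model. In the sketch below, a simple feedback delay stands in for the real reverb patch and two channels stand in for four; the class and function names, the delay length, and the feedback value are all assumptions made for the example. Only the wet signal is output, so that the position of the echo is easy to see.

```python
from typing import List, Sequence, Tuple


class FeedbackDelay:
    """Tiny feedback delay line, standing in for a real reverb."""

    def __init__(self, delay_samples: int = 4, feedback: float = 0.5) -> None:
        self._line = [0.0] * delay_samples
        self._index = 0
        self._feedback = feedback

    def process(self, sample: float) -> float:
        delayed = self._line[self._index]
        self._line[self._index] = sample + delayed * self._feedback
        self._index = (self._index + 1) % len(self._line)
        return delayed  # wet signal only


def reverb_then_pan(source: Sequence[float],
                    gains: Sequence[Tuple[float, ...]]) -> List[List[float]]:
    """Original approach: one reverb on the source, then panning."""
    reverb = FeedbackDelay()
    frames = []
    for sample, frame_gains in zip(source, gains):
        wet = reverb.process(sample)
        frames.append([wet * g for g in frame_gains])
    return frames


def pan_then_reverb(source: Sequence[float],
                    gains: Sequence[Tuple[float, ...]]) -> List[List[float]]:
    """Revised approach: panning first, then one reverb per output channel."""
    reverbs = [FeedbackDelay() for _ in gains[0]]
    frames = []
    for sample, frame_gains in zip(source, gains):
        frames.append([r.process(sample * g)
                       for r, g in zip(reverbs, frame_gains)])
    return frames


# An impulse is played in channel 0, then the source is panned to channel 1.
source = [1.0] + [0.0] * 7
gains = [(1.0, 0.0)] + [(0.0, 1.0)] * 7
```

With this input, the echo produced by `reverb_then_pan` comes out of channel 1, following the source, whereas the echo produced by `pan_then_reverb` stays in channel 0, where the sound was when it was played.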

References and Related Readings

Books and Papers

- Boursier-Mougenot, Céleste (2008). états seconds. Arles, France: Analogues.

- Chowning, John (2011). Turenas: the realization of a dream. Stanford: Stanford University.

- McLuhan, Marshall (1964). Understanding Media: The Extensions of Man (3rd ed.). Toronto, Canada: Signet.

- Reynolds, Simon (2005). Post-Rock. In Cox, C. and Warner, D. (Eds.), Audio Culture - Readings in Modern Music (pp. 358-361). Continuum.

- Richardson, Mark (2010). Flaming Lips' Zaireeka. New York, USA: Continuum.

- Vande Gorne, Annette (2002). L’interprétation spatiale. Essai de formalisation méthodologique. Lille: Université de Lille-3.

Videos

- BAND -OSL-. (2012, January 17). OSL with Chevreuil - Turbofonte. [Video file]. Retrieved from https://www.youtube.com/watch?v=Yt7Qzb3Got0

- EssyInTheSky. (2012, March 23). John Chowning - Turenas. [Video file]. Retrieved from https://www.youtube.com/watch?v=71Y9X9WKAQU

- TheAlbeghart. (2013, September 29). The Flaming Lips - Zaireeka [FULL ALBUM] [HQ]. [Video file]. Retrieved from https://www.youtube.com/watch?v=71Y9X9WKAQU

Wikipedia and Online Articles

- Sandeep Bhagwati. (n.d.). Retrieved from http://matralab.hexagram.ca/people/sandeep-bhagwati/

- Calore, M. (2009, May 12). May 12, 1967: Pink Floyd Astounds With ‘Sound in the Round’. Retrieved from https://www.wired.com/2009/05/dayintech_0512/

- Chevreuil (groupe). (n.d.). In Wikipedia. Retrieved from https://fr.wikipedia.org/wiki/Chevreuil_(groupe)

- John Chowning. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/John_Chowning

- Clouds Taste Metallic. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Clouds_Taste_Metallic

- Le Corbusier. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Le_Corbusier

- Wayne Coyne. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Wayne_Coyne

- Brian Eno. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Brian_Eno

- The Flaming Lips. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/The_Flaming_Lips

- Frequency modulation synthesis. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Frequency_modulation_synthesis

- groove~, Variable-rate looping sample playback. (n.d.). Retrieved from https://docs.cycling74.com/max5/refpages/msp-ref/groove~.html

- Modular programming. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Modular_programming

- Musique concrète. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Musique_concr%C3%A8te

- patcher, Create a subpatch within a patch. (n.d.). Retrieved from https://docs.cycling74.com/max5/refpages/max-ref/patcher.html

- Overdubbing. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Overdubbing

- pictslider, Picture-based slider control. (n.d.). Retrieved from https://docs.cycling74.com/max5/refpages/max-ref/pictslider.html

- Philips Pavilion. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Philips_Pavilion

- Poème électronique. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Po%C3%A8me_%C3%A9lectronique

- Solids. (n.d.). Retrieved from https://solids.bandcamp.com/

- Tutorial 5: Message Order and Debugging. (n.d.). Retrieved from https://docs.cycling74.com/max5/tutorials/max-tut/basicchapter05.html

- Tutorial 14: Encapsulation. (n.d.). Retrieved from https://docs.cycling74.com/max5/tutorials/max-tut/basicchapter14.html

- Transmissions from the Satellite Heart. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Transmissions_from_the_Satellite_Heart

- Edgard Varèse. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Edgard_Var%C3%A8se

- Zaireeka. (n.d.). In Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Zaireeka

Credits

Live performance photos by Michael Khayata.

Role

Design, programming, sound design, and live performance

Context

While studying Intermedia and Cyber Arts at Concordia University

Circa

2017